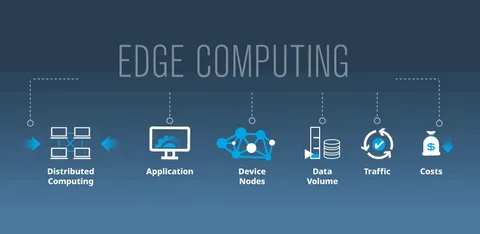

Edge computing moves computation closer to where data is generated, reducing latency for use cases that depend on near-real-time responses. Retail sensors, industrial monitoring, and live video analytics benefit when decisions happen locally instead of waiting on a round trip to distant servers. The approach also reduces bandwidth costs by filtering data on the device so that only relevant readings reach centralized systems.
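
As a minimal sketch of local filtering, assuming a sensor that produces a stream of numeric readings, the Python snippet below applies a simple deadband filter: a reading is forwarded only when it differs from the last forwarded value by more than a threshold. The function name `deadband_filter`, the threshold, and the sample values are illustrative assumptions, not part of any particular edge platform.

```python
from typing import Iterable, List, Optional, Tuple


def deadband_filter(readings: Iterable[float], threshold: float) -> List[Tuple[int, float]]:
    """Return (index, value) pairs worth sending upstream.

    The first reading is always forwarded; after that, a reading is forwarded
    only if it differs from the last forwarded value by more than `threshold`.
    """
    forwarded: List[Tuple[int, float]] = []
    last_sent: Optional[float] = None
    for index, value in enumerate(readings):
        if last_sent is None or abs(value - last_sent) > threshold:
            forwarded.append((index, value))
            last_sent = value
    return forwarded


if __name__ == "__main__":
    # Hypothetical temperature samples (degrees C) from a single sensor.
    samples = [21.0, 21.1, 21.05, 21.2, 23.5, 23.6, 23.4, 21.0]
    sent = deadband_filter(samples, threshold=0.5)
    print(sent)  # [(0, 21.0), (4, 23.5), (7, 21.0)]
    print(f"forwarded {len(sent)} of {len(samples)} readings")  # forwarded 3 of 8 readings
```

Here only three of eight readings would leave the device. A real deployment would tune the threshold per signal and might also send periodic heartbeats so that a quiet sensor is not mistaken for a failed one.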

Architecture Tradeoffs

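Distributing workloads across many devices introduces challenges around data consistency, software updates, and security. Teams must balance local autonomy, which lets nodes keep operating when disconnected, with centralized governance, which keeps node configurations from drifting apart across the fleet.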
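
One way to make configuration drift visible, sketched here under the assumption that each node's configuration is a JSON-serializable dictionary, is to compare a canonical hash of every node's settings against the centrally desired state and then report which keys differ. The helper names, node names, and configuration keys below are hypothetical.

```python
import hashlib
import json
from typing import Any, Dict, List

_MISSING = object()  # sentinel for keys present on only one side


def config_fingerprint(config: Dict[str, Any]) -> str:
    """SHA-256 of the config in canonical JSON form (sorted keys, compact separators),
    so two configs with the same content but different key order hash the same."""
    canonical = json.dumps(config, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def drifted_keys(desired: Dict[str, Any], actual: Dict[str, Any]) -> List[str]:
    """Top-level keys whose values differ, including keys missing on either side."""
    keys = set(desired) | set(actual)
    return sorted(k for k in keys if desired.get(k, _MISSING) != actual.get(k, _MISSING))


if __name__ == "__main__":
    # Hypothetical desired state published by a central control plane.
    desired = {"sample_rate_hz": 10, "upload_interval_s": 60, "tls": True}
    # Hypothetical configs reported by two nodes.
    fleet = {
        "node-a": {"tls": True, "upload_interval_s": 60, "sample_rate_hz": 10},
        "node-b": {"sample_rate_hz": 50, "upload_interval_s": 60, "tls": True, "debug": True},
    }
    target = config_fingerprint(desired)
    for node, cfg in fleet.items():
        if config_fingerprint(cfg) != target:
            print(node, "drifted:", drifted_keys(desired, cfg))  # node-b drifted: ['debug', 'sample_rate_hz']
```

Hashing a canonical form means key order does not matter, so `node-a` matches the desired state even though its keys are listed differently; only `node-b` is reported.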

Operations at Scale

Fleet management, remote updates, and observability become essential as the number of nodes grows. Standardized telemetry, with consistent metric names, units, and labels across nodes, exposes bottlenecks early and simplifies troubleshooting.
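
Remote updates are commonly rolled out in stages so that a bad release reaches only a small part of the fleet before telemetry reveals the problem. The sketch below assumes a stubbed `apply_update` callback that reports success or failure per node, and it halts the rollout when a wave's failure rate exceeds a threshold. `plan_waves`, `run_rollout`, and the wave fractions are illustrative, not a specific fleet manager's API.

```python
import math
from typing import Callable, List, Sequence, Tuple


def plan_waves(nodes: Sequence[str], fractions: Sequence[float]) -> List[List[str]]:
    """Split nodes into rollout waves.

    `fractions` are cumulative shares of the fleet, e.g. (0.25, 0.5, 1.0).
    Each wave contains at least one node; any nodes left over after the last
    fraction form a final wave.
    """
    waves: List[List[str]] = []
    start = 0
    for frac in fractions:
        end = min(len(nodes), max(start + 1, math.ceil(frac * len(nodes))))
        if end > start:
            waves.append(list(nodes[start:end]))
            start = end
    if start < len(nodes):
        waves.append(list(nodes[start:]))
    return waves


def run_rollout(
    waves: List[List[str]],
    apply_update: Callable[[str], bool],
    max_failure_rate: float,
) -> Tuple[List[str], bool]:
    """Update wave by wave; halt before the next wave if the current wave's
    failure rate exceeds `max_failure_rate`. Returns (updated_nodes, completed)."""
    updated: List[str] = []
    for wave in waves:
        failures = 0
        for node in wave:
            if apply_update(node):
                updated.append(node)
            else:
                failures += 1
        if wave and failures / len(wave) > max_failure_rate:
            return updated, False
    return updated, True


if __name__ == "__main__":
    nodes = [f"node-{i:02d}" for i in range(8)]
    waves = plan_waves(nodes, (0.25, 0.5, 1.0))
    print([len(w) for w in waves])  # [2, 2, 4]

    # Stub updater: pretend node-03 fails to apply the new release.
    updated, completed = run_rollout(waves, lambda n: n != "node-03", max_failure_rate=0.2)
    print(updated, completed)  # ['node-00', 'node-01', 'node-02'] False
```

The failure on `node-03` pushes the second wave's failure rate to 50%, above the 20% limit, so the four nodes in the final wave are never touched.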

Next Steps

Clear service boundaries and testing under constrained conditions, such as limited bandwidth, intermittent connectivity, and restricted compute, prepare systems for real-world variance.
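
Testing under constrained conditions can start small. The sketch below assumes an edge node that queues outgoing messages in a bounded store-and-forward buffer, and it checks against a simulated lossy uplink with a fixed random seed that messages still arrive in order and that overflow drops the oldest data. `StoreAndForwardBuffer`, `flaky_uplink`, the 30% loss rate, and the drop-oldest policy are assumptions chosen for illustration.

```python
import random
from collections import deque
from typing import Callable, Deque, List


class StoreAndForwardBuffer:
    """Bounded outbox for an edge node.

    Messages queue locally while the uplink is unavailable. When the buffer
    is full, appending evicts the oldest message (deque maxlen behavior).
    """

    def __init__(self, capacity: int) -> None:
        self._queue: Deque[str] = deque(maxlen=capacity)
        self.dropped = 0

    def enqueue(self, message: str) -> None:
        if len(self._queue) == self._queue.maxlen:
            self.dropped += 1  # the append below evicts the oldest message
        self._queue.append(message)

    def flush(self, send: Callable[[str], bool]) -> int:
        """Send messages in FIFO order, stopping at the first failure so order is kept."""
        sent = 0
        while self._queue:
            if not send(self._queue[0]):
                break
            self._queue.popleft()
            sent += 1
        return sent

    def __len__(self) -> int:
        return len(self._queue)


def flaky_uplink(loss_rate: float, seed: int, delivered: List[str]) -> Callable[[str], bool]:
    """Simulated constrained link: each send fails with probability `loss_rate`.
    Successful sends are recorded in `delivered`. A fixed seed keeps tests repeatable."""
    rng = random.Random(seed)

    def send(message: str) -> bool:
        if rng.random() < loss_rate:
            return False
        delivered.append(message)
        return True

    return send


if __name__ == "__main__":
    # Lossy link: every message still arrives, in order, after enough retries.
    delivered: List[str] = []
    buffer = StoreAndForwardBuffer(capacity=100)
    messages = [f"m{i}" for i in range(50)]
    for m in messages:
        buffer.enqueue(m)
    send = flaky_uplink(loss_rate=0.3, seed=7, delivered=delivered)
    while buffer:
        buffer.flush(send)
    assert delivered == messages and buffer.dropped == 0

    # Overflow: capacity 3, five messages -> the two oldest are dropped.
    small = StoreAndForwardBuffer(capacity=3)
    for i in range(5):
        small.enqueue(f"m{i}")
    out: List[str] = []
    small.flush(flaky_uplink(loss_rate=0.0, seed=0, delivered=out))
    assert small.dropped == 2 and out == ["m2", "m3", "m4"]
    print("constrained-link checks passed")
```

Dropping the oldest messages favors fresh data, which suits telemetry; a workload that cannot lose records would need a different policy, such as spilling to local disk.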